Titanic Survival Prediction using Decision Trees#

Kaggle’s Titanic Machine Learning Challenge#

The Titanic dataset is one of the most popular machine learning challenges on Kaggle. In this analysis, we’ll predict passenger survival using Decision Tree algorithms.

Challenge Goal: Predict which passengers survived the Titanic shipwreck based on features like age, gender, ticket class, etc.

Why Decision Trees?

Easy to interpret and visualize

No need for feature scaling

Handle both numerical and categorical data

Can capture non-linear relationships

Provide feature importance rankings

# Import necessary libraries

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.tree import DecisionTreeClassifier, plot_tree

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import train_test_split, cross_val_score, GridSearchCV

from sklearn.metrics import accuracy_score, classification_report, confusion_matrix

from sklearn.preprocessing import LabelEncoder

import warnings

warnings.filterwarnings('ignore')

# Set style for better visualizations

plt.style.use('seaborn-v0_8')

sns.set_palette("husl")

1. Data Loading and Initial Exploration#

First, let’s load the Titanic dataset. We’ll use the built-in dataset from seaborn for this analysis.

# Load the Titanic dataset

df = pd.read_csv('train.csv')

# update the column names to be lowercase

df.columns = df.columns.str.lower()

# print the dataset shape

print("Dataset Shape:", df.shape)

print("\n" + "="*50)

print("DATASET OVERVIEW")

print("="*50)

print(df.head())

print("\n" + "="*50)

print("DATASET INFO")

print("="*50)

print(df.info())

print("\n" + "="*50)

print("MISSING VALUES")

print("="*50)

print(df.isnull().sum())

print("\n" + "="*50)

print("BASIC STATISTICS")

print("="*50)

print(df.describe())

Dataset Shape: (891, 12)

==================================================

DATASET OVERVIEW

==================================================

passengerid survived pclass \

0 1 0 3

1 2 1 1

2 3 1 3

3 4 1 1

4 5 0 3

name sex age sibsp \

0 Braund, Mr. Owen Harris male 22.0 1

1 Cumings, Mrs. John Bradley (Florence Briggs Th... female 38.0 1

2 Heikkinen, Miss. Laina female 26.0 0

3 Futrelle, Mrs. Jacques Heath (Lily May Peel) female 35.0 1

4 Allen, Mr. William Henry male 35.0 0

parch ticket fare cabin embarked

0 0 A/5 21171 7.2500 NaN S

1 0 PC 17599 71.2833 C85 C

2 0 STON/O2. 3101282 7.9250 NaN S

3 0 113803 53.1000 C123 S

4 0 373450 8.0500 NaN S

==================================================

DATASET INFO

==================================================

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 891 entries, 0 to 890

Data columns (total 12 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 passengerid 891 non-null int64

1 survived 891 non-null int64

2 pclass 891 non-null int64

3 name 891 non-null object

4 sex 891 non-null object

5 age 714 non-null float64

6 sibsp 891 non-null int64

7 parch 891 non-null int64

8 ticket 891 non-null object

9 fare 891 non-null float64

10 cabin 204 non-null object

11 embarked 889 non-null object

dtypes: float64(2), int64(5), object(5)

memory usage: 83.7+ KB

None

==================================================

MISSING VALUES

==================================================

passengerid 0

survived 0

pclass 0

name 0

sex 0

age 177

sibsp 0

parch 0

ticket 0

fare 0

cabin 687

embarked 2

dtype: int64

==================================================

BASIC STATISTICS

==================================================

passengerid survived pclass age sibsp \

count 891.000000 891.000000 891.000000 714.000000 891.000000

mean 446.000000 0.383838 2.308642 29.699118 0.523008

std 257.353842 0.486592 0.836071 14.526497 1.102743

min 1.000000 0.000000 1.000000 0.420000 0.000000

25% 223.500000 0.000000 2.000000 20.125000 0.000000

50% 446.000000 0.000000 3.000000 28.000000 0.000000

75% 668.500000 1.000000 3.000000 38.000000 1.000000

max 891.000000 1.000000 3.000000 80.000000 8.000000

parch fare

count 891.000000 891.000000

mean 0.381594 32.204208

std 0.806057 49.693429

min 0.000000 0.000000

25% 0.000000 7.910400

50% 0.000000 14.454200

75% 0.000000 31.000000

max 6.000000 512.329200

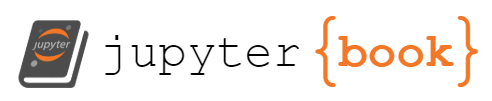

2. Exploratory Data Analysis (EDA)#

Let’s explore the data to understand the relationships between features and survival rates.

# Create a comprehensive EDA

fig, axes = plt.subplots(2, 3, figsize=(18, 12))

fig.suptitle('Titanic Dataset - Exploratory Data Analysis', fontsize=16, fontweight='bold')

# 1. Survival Rate

survival_counts = df['survived'].value_counts()

axes[0, 0].pie(survival_counts.values, labels=['Died', 'Survived'], autopct='%1.1f%%',

colors=['#ff7f7f', '#7fbf7f'])

axes[0, 0].set_title('Overall Survival Rate')

# 2. Survival by Gender

survival_by_sex = pd.crosstab(df['sex'], df['survived'], normalize='index') * 100

survival_by_sex.plot(kind='bar', ax=axes[0, 1], color=['#ff7f7f', '#7fbf7f'])

axes[0, 1].set_title('Survival Rate by Gender')

axes[0, 1].set_ylabel('Percentage (%)')

axes[0, 1].legend(['Died', 'Survived'])

axes[0, 1].tick_params(axis='x', rotation=0)

# 3. Survival by Passenger Class

survival_by_class = pd.crosstab(df['pclass'], df['survived'], normalize='index') * 100

survival_by_class.plot(kind='bar', ax=axes[0, 2], color=['#ff7f7f', '#7fbf7f'])

axes[0, 2].set_title('Survival Rate by Passenger Class')

axes[0, 2].set_ylabel('Percentage (%)')

axes[0, 2].legend(['Died', 'Survived'])

axes[0, 2].tick_params(axis='x', rotation=0)

# 4. Age Distribution by Survival

df[df['survived'] == 0]['age'].hist(bins=30, alpha=0.7, label='Died', ax=axes[1, 0], color='#ff7f7f')

df[df['survived'] == 1]['age'].hist(bins=30, alpha=0.7, label='Survived', ax=axes[1, 0], color='#7fbf7f')

axes[1, 0].set_title('Age Distribution by Survival')

axes[1, 0].set_xlabel('Age')

axes[1, 0].set_ylabel('Frequency')

axes[1, 0].legend()

# 5. Fare Distribution by Survival

df[df['survived'] == 0]['fare'].hist(bins=30, alpha=0.7, label='Died', ax=axes[1, 1], color='#ff7f7f')

df[df['survived'] == 1]['fare'].hist(bins=30, alpha=0.7, label='Survived', ax=axes[1, 1], color='#7fbf7f')

axes[1, 1].set_title('Fare Distribution by Survival')

axes[1, 1].set_xlabel('Fare')

axes[1, 1].set_ylabel('Frequency')

axes[1, 1].legend()

axes[1, 1].set_xlim(0, 200) # Limit x-axis for better visualization

# 6. Survival by Embarkation Port

survival_by_embark = pd.crosstab(df['embarked'], df['survived'], normalize='index') * 100

survival_by_embark.plot(kind='bar', ax=axes[1, 2], color=['#ff7f7f', '#7fbf7f'])

axes[1, 2].set_title('Survival Rate by Embarkation Port')

axes[1, 2].set_ylabel('Percentage (%)')

axes[1, 2].legend(['Died', 'Survived'])

axes[1, 2].tick_params(axis='x', rotation=45)

plt.tight_layout()

plt.show()

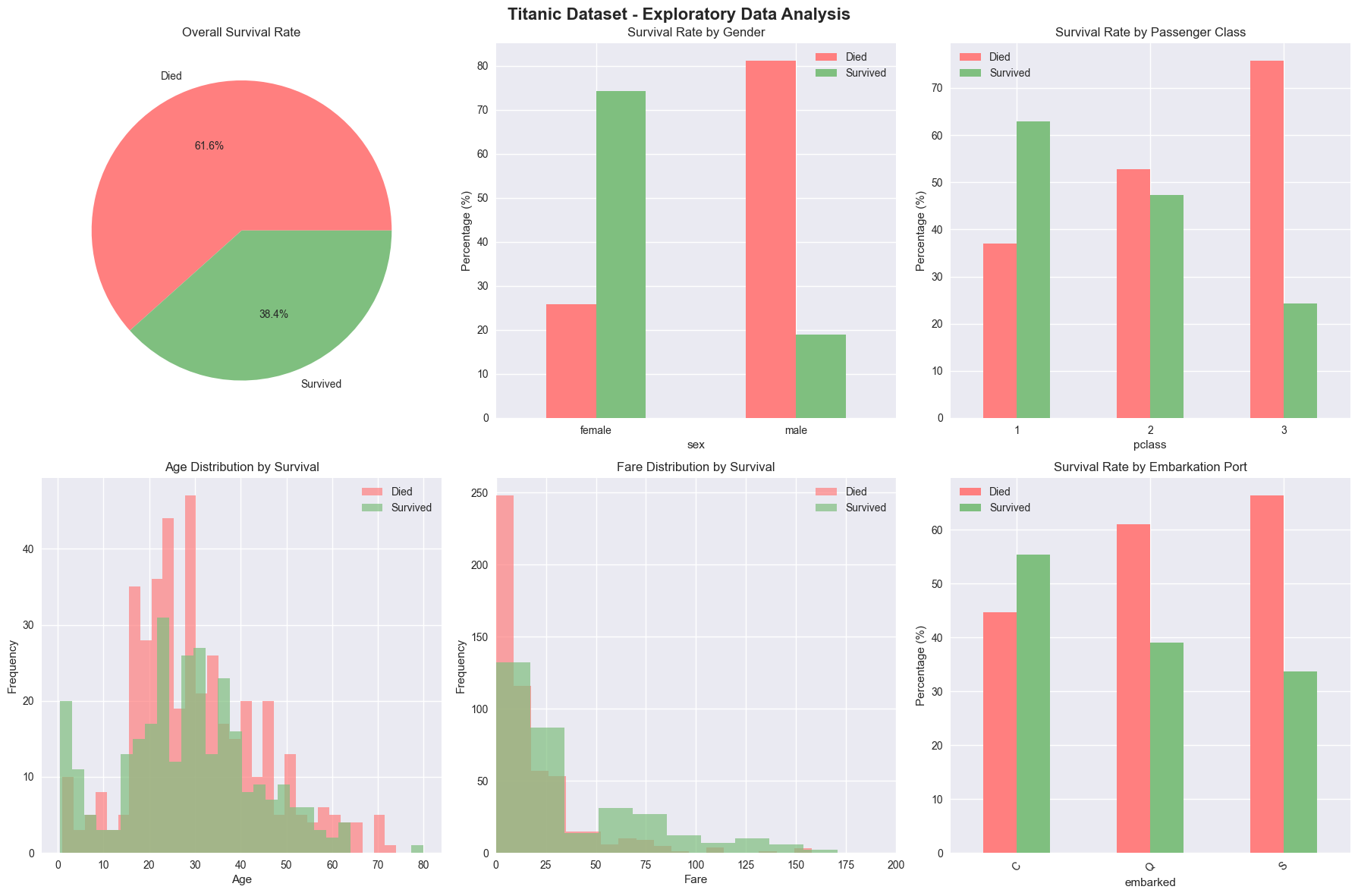

# Create a correlation heatmap

plt.figure(figsize=(12, 8))

# Select numerical columns for correlation

numeric_cols = ['survived', 'pclass', 'age', 'sibsp', 'parch', 'fare']

correlation_matrix = df[numeric_cols].corr()

# Create heatmap

sns.heatmap(correlation_matrix, annot=True, cmap='RdYlBu_r', center=0,

square=True, fmt='.2f', cbar_kws={'shrink': 0.8})

plt.title('Correlation Matrix of Numerical Features', fontsize=14, fontweight='bold')

plt.tight_layout()

plt.show()

# Print key insights

print("KEY INSIGHTS FROM EDA:")

print("="*50)

print(f"• Overall survival rate: {df['survived'].mean():.1%}")

print(f"• Female survival rate: {df[df['sex'] == 'female']['survived'].mean():.1%}")

print(f"• Male survival rate: {df[df['sex'] == 'male']['survived'].mean():.1%}")

print(f"• First class survival rate: {df[df['pclass'] == 1]['survived'].mean():.1%}")

print(f"• Third class survival rate: {df[df['pclass'] == 3]['survived'].mean():.1%}")

print(f"• Children (≤12) survival rate: {df[df['age'] <= 12]['survived'].mean():.1%}")

print(f"• Adults (>12) survival rate: {df[df['age'] > 12]['survived'].mean():.1%}")

KEY INSIGHTS FROM EDA:

==================================================

• Overall survival rate: 38.4%

• Female survival rate: 74.2%

• Male survival rate: 18.9%

• First class survival rate: 63.0%

• Third class survival rate: 24.2%

• Children (≤12) survival rate: 58.0%

• Adults (>12) survival rate: 38.8%

3. Data Preprocessing#

Now let’s prepare the data for our decision tree model by handling missing values and encoding categorical variables.

# Preprocessing function that works for both train and test data

def preprocess_titanic_data(df, is_train=True):

"""

Preprocess Titanic data for both training and test sets

"""

df_processed = df.copy()

# lowercase the column names

df_processed.columns = df_processed.columns.str.lower()

print(f"\n{'TRAINING' if is_train else 'TEST'} DATA PREPROCESSING:")

print("="*50)

print(f"Original shape: {df_processed.shape}")

print(f"Missing values:\n{df_processed.isnull().sum()}")

# 1. Handle missing values

print(f"\n1. Handling Missing Values:")

# Age: Fill with median age by passenger class and gender

for pclass in df_processed['pclass'].unique():

for sex in df_processed['sex'].unique():

mask = (df_processed['pclass'] == pclass) & (df_processed['sex'] == sex)

median_age = df_processed[mask]['age'].median()

if pd.isna(median_age): # If no data for this group, use overall median

median_age = df_processed['age'].median()

df_processed.loc[mask & df_processed['age'].isnull(), 'age'] = median_age

# Embarked: Fill with mode (most common port)

if 'embarked' in df_processed.columns:

mode_embarked = df_processed['embarked'].mode()[0] if not df_processed['embarked'].mode().empty else 'S'

df_processed['embarked'].fillna(mode_embarked, inplace=True)

print(f" • Embarked missing values filled with: {mode_embarked}")

# Fare: Fill with median fare by passenger class

if df_processed['fare'].isnull().any():

for pclass in df_processed['pclass'].unique():

mask = df_processed['pclass'] == pclass

median_fare = df_processed[mask]['fare'].median()

df_processed.loc[mask & df_processed['fare'].isnull(), 'fare'] = median_fare

print(f" • Fare missing values filled with class-specific medians")

# Cabin: Extract deck information

df_processed['deck'] = df_processed['cabin'].str[0] if 'cabin' in df_processed.columns else 'Unknown'

df_processed['deck'].fillna('Unknown', inplace=True)

# Print age processing summary

age_missing_before = df['Age'].isnull().sum() if 'Age' in df.columns else df['age'].isnull().sum()

print(f" • Age missing values filled: {age_missing_before} → {df_processed['age'].isnull().sum()}")

# 2. Feature Engineering

print(f"\n2. Feature Engineering:")

# Family size features

df_processed['family_size'] = df_processed['sibsp'] + df_processed['parch'] + 1

df_processed['is_alone'] = (df_processed['family_size'] == 1).astype(int)

# Age groups

df_processed['age_group'] = pd.cut(df_processed['age'],

bins=[0, 12, 18, 35, 60, 100],

labels=['child', 'teen', 'young_adult', 'adult', 'senior'])

# Fare groups

df_processed['fare_group'] = pd.cut(df_processed['fare'],

bins=[0, 10, 20, 50, 100, 1000],

labels=['very_low', 'low', 'medium', 'high', 'very_high'])

# Title extraction from Name

if 'name' in df_processed.columns:

df_processed['title'] = df_processed['name'].str.extract(' ([A-Za-z]+)\.', expand=False)

# Group rare titles

title_mapping = {

'Mr': 'Mr', 'Miss': 'Miss', 'Mrs': 'Mrs', 'Master': 'Master',

'Dr': 'Rare', 'Rev': 'Rare', 'Col': 'Rare', 'Major': 'Rare',

'Mlle': 'Miss', 'Countess': 'Rare', 'Ms': 'Miss', 'Lady': 'Rare',

'Jonkheer': 'Rare', 'Don': 'Rare', 'Dona': 'Rare', 'Mme': 'Mrs',

'Capt': 'Rare', 'Sir': 'Rare'

}

df_processed['title'] = df_processed['title'].map(title_mapping).fillna('rare')

print(f" • family_size: {df_processed['family_size'].min()} to {df_processed['family_size'].max()}")

print(f" • is_alone: {df_processed['is_alone'].sum()} passengers traveling alone")

print(f" • age_group: {df_processed['age_group'].value_counts().to_dict()}")

print(f" • title extracted from name column")

# 3. Encode categorical variables

print(f"\n3. Encoding Categorical Variables:")

# One-hot encoding for categorical features

categorical_features = ['sex', 'embarked', 'deck', 'age_group', 'fare_group', 'title', 'cabin_class']

for feature in categorical_features:

if feature in df_processed.columns:

# Convert to string to handle any remaining NaN values

df_processed[feature] = df_processed[feature].astype(str)

# Create dummy variables

dummies = pd.get_dummies(df_processed[feature], prefix=feature)

df_processed = pd.concat([df_processed, dummies], axis=1)

print(f" • {feature}: {len(dummies.columns)} categories encoded")

print(f"\nAfter preprocessing:")

print(f"Shape: {df_processed.shape}")

total_missing = df_processed.isnull().sum().sum()

print(f"Missing values: {total_missing}")

# Show breakdown of any remaining missing values

if total_missing > 0:

missing_by_col = df_processed.isnull().sum()

missing_cols = missing_by_col[missing_by_col > 0]

if len(missing_cols) > 0:

print(f"Remaining missing values by column:")

for col, count in missing_cols.items():

print(f" • {col}: {count} missing")

print(f"Note: Some missing values in encoded features are normal and will be handled during modeling.")

return df_processed

# Preprocess both training and test data

train_df = pd.read_csv('train.csv')

test_df = pd.read_csv('test.csv')

# Preprocess the data

train_processed = preprocess_titanic_data(train_df, is_train=True)

test_processed = preprocess_titanic_data(test_df, is_train=False)

TRAINING DATA PREPROCESSING:

==================================================

Original shape: (891, 12)

Missing values:

passengerid 0

survived 0

pclass 0

name 0

sex 0

age 177

sibsp 0

parch 0

ticket 0

fare 0

cabin 687

embarked 2

dtype: int64

1. Handling Missing Values:

• Embarked missing values filled with: S

• Age missing values filled: 177 → 0

2. Feature Engineering:

• family_size: 1 to 11

• is_alone: 537 passengers traveling alone

• age_group: {'young_adult': 514, 'adult': 216, 'teen': 70, 'child': 69, 'senior': 22}

• title extracted from name column

3. Encoding Categorical Variables:

• sex: 2 categories encoded

• embarked: 3 categories encoded

• deck: 9 categories encoded

• age_group: 5 categories encoded

• fare_group: 6 categories encoded

• title: 5 categories encoded

After preprocessing:

Shape: (891, 48)

Missing values: 687

Remaining missing values by column:

• cabin: 687 missing

Note: Some missing values in encoded features are normal and will be handled during modeling.

TEST DATA PREPROCESSING:

==================================================

Original shape: (418, 11)

Missing values:

passengerid 0

pclass 0

name 0

sex 0

age 86

sibsp 0

parch 0

ticket 0

fare 1

cabin 327

embarked 0

dtype: int64

1. Handling Missing Values:

• Embarked missing values filled with: S

• Fare missing values filled with class-specific medians

• Age missing values filled: 86 → 0

2. Feature Engineering:

• family_size: 1 to 11

• is_alone: 253 passengers traveling alone

• age_group: {'young_adult': 250, 'adult': 103, 'teen': 29, 'child': 25, 'senior': 11}

• title extracted from name column

3. Encoding Categorical Variables:

• sex: 2 categories encoded

• embarked: 3 categories encoded

• deck: 8 categories encoded

• age_group: 5 categories encoded

• fare_group: 6 categories encoded

• title: 5 categories encoded

After preprocessing:

Shape: (418, 46)

Missing values: 327

Remaining missing values by column:

• cabin: 327 missing

Note: Some missing values in encoded features are normal and will be handled during modeling.

4. Model Building with Decision Trees#

Now let’s build and train our decision tree models.

Model Training and Comparison#

Now let’s train multiple decision tree models and compare their performance.

# Prepare features for modeling

print("\n" + "="*60)

print("PREPARING DATA FOR MODELING")

print("="*60)

# Select features that will work for both train and test sets

base_features = ['pclass', 'age', 'sibsp', 'parch', 'fare', 'family_size', 'is_alone']

# Add encoded categorical features (dummy variables)

encoded_features = []

for col in train_processed.columns:

if any(col.startswith(prefix) for prefix in ['sex_', 'embarked_', 'deck_', 'age_group_', 'fare_group_', 'title_']):

encoded_features.append(col)

# Combine all features

feature_columns = base_features + encoded_features

# Ensure features exist in both train and test sets

train_features = [col for col in feature_columns if col in train_processed.columns]

test_features = [col for col in feature_columns if col in test_processed.columns]

# Use only features that exist in both datasets

final_features = list(set(train_features) & set(test_features))

final_features.sort() # Sort for consistency

print(f"Base features: {len(base_features)}")

print(f"Encoded features: {len(encoded_features)}")

print(f"Final features for modeling: {len(final_features)}")

# Prepare training data

X_train_full = train_processed[final_features]

y_train_full = train_processed['survived']

# Prepare test data for final predictions

X_test_kaggle = test_processed[final_features]

# Create validation split from training data

X_train, X_val, y_train, y_val = train_test_split(

X_train_full, y_train_full, test_size=0.2, random_state=42, stratify=y_train_full

)

print(f"\nData preparation complete:")

print(f"• Training set: {X_train.shape[0]} samples")

print(f"• Validation set: {X_val.shape[0]} samples")

print(f"• Kaggle test set: {X_test_kaggle.shape[0]} samples")

print(f"• Features: {len(final_features)}")

print(f"• Training survival rate: {y_train.mean():.3f}")

print(f"• Validation survival rate: {y_val.mean():.3f}")

print(f"\nSelected features:")

for i, feature in enumerate(final_features, 1):

print(f" {i:2d}. {feature}")

# Handle any remaining missing values

if X_train.isnull().any().any():

print(f"\n⚠️ Handling remaining missing values...")

X_train = X_train.fillna(X_train.median())

X_val = X_val.fillna(X_train.median())

X_train_full = X_train_full.fillna(X_train_full.median())

X_test_kaggle = X_test_kaggle.fillna(X_train_full.median())

print(f"✅ Missing values handled with median imputation")

============================================================

PREPARING DATA FOR MODELING

============================================================

Base features: 7

Encoded features: 30

Final features for modeling: 36

Data preparation complete:

• Training set: 712 samples

• Validation set: 179 samples

• Kaggle test set: 418 samples

• Features: 36

• Training survival rate: 0.383

• Validation survival rate: 0.385

Selected features:

1. age

2. age_group_adult

3. age_group_child

4. age_group_senior

5. age_group_teen

6. age_group_young_adult

7. deck_A

8. deck_B

9. deck_C

10. deck_D

11. deck_E

12. deck_F

13. deck_G

14. deck_Unknown

15. embarked_C

16. embarked_Q

17. embarked_S

18. family_size

19. fare

20. fare_group_high

21. fare_group_low

22. fare_group_medium

23. fare_group_nan

24. fare_group_very_high

25. fare_group_very_low

26. is_alone

27. parch

28. pclass

29. sex_female

30. sex_male

31. sibsp

32. title_Master

33. title_Miss

34. title_Mr

35. title_Mrs

36. title_Rare

# Build multiple decision tree models

print("\n" + "="*60)

print("BUILDING DECISION TREE MODELS")

print("="*60)

# 1. Basic Decision Tree

print("\n1. Basic Decision Tree")

dt_basic = DecisionTreeClassifier(random_state=42)

dt_basic.fit(X_train, y_train)

# Predictions

y_pred_basic = dt_basic.predict(X_val)

accuracy_basic = accuracy_score(y_val, y_pred_basic)

print(f" Validation Accuracy: {accuracy_basic:.4f}")

# 2. Pruned Decision Tree (to avoid overfitting)

print("\n2. Pruned Decision Tree")

dt_pruned = DecisionTreeClassifier(

max_depth=5,

min_samples_split=20,

min_samples_leaf=10,

random_state=42

)

dt_pruned.fit(X_train, y_train)

y_pred_pruned = dt_pruned.predict(X_val)

accuracy_pruned = accuracy_score(y_val, y_pred_pruned)

print(f" Validation Accuracy: {accuracy_pruned:.4f}")

# 3. Optimized Decision Tree using GridSearch

print("\n3. Hyperparameter Tuning with GridSearch")

print(" Searching optimal parameters...")

param_grid = {

'max_depth': [3, 4, 5, 6, 7, 8, None],

'min_samples_split': [10, 20, 30, 50],

'min_samples_leaf': [5, 10, 15, 20],

'criterion': ['gini', 'entropy'],

'max_features': ['sqrt', 'log2', None]

}

dt_grid = DecisionTreeClassifier(random_state=42)

grid_search = GridSearchCV(

dt_grid, param_grid, cv=5, scoring='accuracy', n_jobs=-1, verbose=0

)

grid_search.fit(X_train, y_train)

# Best model

dt_optimized = grid_search.best_estimator_

y_pred_optimized = dt_optimized.predict(X_val)

accuracy_optimized = accuracy_score(y_val, y_pred_optimized)

print(f" Best parameters: {grid_search.best_params_}")

print(f" Best CV score: {grid_search.best_score_:.4f}")

print(f" Validation accuracy: {accuracy_optimized:.4f}")

# 4. Random Forest for comparison

print("\n4. Random Forest (Ensemble of Decision Trees)")

rf = RandomForestClassifier(

n_estimators=100,

max_depth=10,

min_samples_split=10,

min_samples_leaf=5,

random_state=42

)

rf.fit(X_train, y_train)

y_pred_rf = rf.predict(X_val)

accuracy_rf = accuracy_score(y_val, y_pred_rf)

print(f" Validation Accuracy: {accuracy_rf:.4f}")

# 5. XGBoost for comparison

from xgboost import XGBClassifier

print("\n5. XGBoost")

xgb = XGBClassifier(

n_estimators=100,

max_depth=10,

learning_rate=0.1,

random_state=42

)

xgb.fit(X_train, y_train)

y_pred_xgb = xgb.predict(X_val)

accuracy_xgb = accuracy_score(y_val, y_pred_xgb)

print(f" Validation Accuracy: {accuracy_xgb:.4f}")

# 6. LightGBM for comparison

from lightgbm import LGBMClassifier

print("\n6. LightGBM")

lgbm = LGBMClassifier(

n_estimators=100,

max_depth=10,

learning_rate=0.1,

random_state=42

)

lgbm.fit(X_train, y_train)

y_pred_lgbm = lgbm.predict(X_val)

accuracy_lgbm = accuracy_score(y_val, y_pred_lgbm)

print(f" Validation Accuracy: {accuracy_lgbm:.4f}")

# 7. CatBoost for comparison

from catboost import CatBoostClassifier

print("\n8. CatBoost")

catboost = CatBoostClassifier(

n_estimators=100,

max_depth=10,

learning_rate=0.1,

random_state=42

)

catboost.fit(X_train, y_train)

y_pred_catboost = catboost.predict(X_val)

accuracy_catboost = accuracy_score(y_val, y_pred_catboost)

print(f" Validation Accuracy: {accuracy_catboost:.4f}")

# 8. Tree Ensemble (Random Forest + XGBoost + LightGBM + CatBoost)

from sklearn.ensemble import VotingClassifier

print("\n8. Tree Ensemble (Random Forest + XGBoost + LightGBM + CatBoost)")

tree_ensemble = VotingClassifier(

estimators=[('rf', rf), ('xgb', xgb), ('lgbm', lgbm), ('catboost', catboost)],

voting='soft'

)

tree_ensemble.fit(X_train, y_train)

y_pred_tree_ensemble = tree_ensemble.predict(X_val)

accuracy_tree_ensemble = accuracy_score(y_val, y_pred_tree_ensemble)

print(f" Validation Accuracy: {accuracy_tree_ensemble:.4f}")

# Summary

print("\n" + "="*60)

print("MODEL COMPARISON SUMMARY (Validation Accuracy)")

print("="*60)

print(f"Basic Decision Tree: {accuracy_basic:.4f}")

print(f"Pruned Decision Tree: {accuracy_pruned:.4f}")

print(f"Optimized Decision Tree: {accuracy_optimized:.4f}")

print(f"Random Forest: {accuracy_rf:.4f}")

print(f"XGBoost: {accuracy_xgb:.4f}")

print(f"LightGBM: {accuracy_lgbm:.4f}")

print(f"Tree Ensemble: {accuracy_tree_ensemble:.4f}")

print(f"CatBoost: {accuracy_catboost:.4f}")

# Select best performing model

models = {

'Basic Decision Tree': (dt_basic, accuracy_basic),

'Pruned Decision Tree': (dt_pruned, accuracy_pruned),

'Optimized Decision Tree': (dt_optimized, accuracy_optimized),

'Random Forest': (rf, accuracy_rf),

'XGBoost': (xgb, accuracy_xgb)

}

best_model_name = max(models.keys(), key=lambda k: models[k][1])

best_model = models[best_model_name][0]

best_accuracy = models[best_model_name][1]

best_predictions = best_model.predict(X_val)

print(f"\n🏆 Best performing model: {best_model_name} ({best_accuracy:.4f})")

# Train final model on full training data

print(f"\n🔄 Training final model on complete training dataset...")

final_model = dt_optimized # Use the optimized decision tree

final_model.fit(X_train_full, y_train_full)

print(f"✅ Final model trained on {X_train_full.shape[0]} samples")

============================================================

BUILDING DECISION TREE MODELS

============================================================

1. Basic Decision Tree

Validation Accuracy: 0.7542

2. Pruned Decision Tree

Validation Accuracy: 0.8268

3. Hyperparameter Tuning with GridSearch

Searching optimal parameters...

Best parameters: {'criterion': 'entropy', 'max_depth': 7, 'max_features': None, 'min_samples_leaf': 5, 'min_samples_split': 50}

Best CV score: 0.8175

Validation accuracy: 0.7933

4. Random Forest (Ensemble of Decision Trees)

Validation Accuracy: 0.8212

5. XGBoost

Validation Accuracy: 0.8268

6. LightGBM

[LightGBM] [Info] Number of positive: 273, number of negative: 439

[LightGBM] [Info] Auto-choosing col-wise multi-threading, the overhead of testing was 0.000533 seconds.

You can set `force_col_wise=true` to remove the overhead.

[LightGBM] [Info] Total Bins 259

[LightGBM] [Info] Number of data points in the train set: 712, number of used features: 30

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.383427 -> initscore=-0.475028

[LightGBM] [Info] Start training from score -0.475028

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

Validation Accuracy: 0.8212

8. CatBoost

0: learn: 0.6219458 total: 61.3ms remaining: 6.07s

1: learn: 0.5698403 total: 66.2ms remaining: 3.24s

2: learn: 0.5233991 total: 69.8ms remaining: 2.26s

3: learn: 0.5033578 total: 70.2ms remaining: 1.68s

4: learn: 0.4711170 total: 71.1ms remaining: 1.35s

5: learn: 0.4511456 total: 71.6ms remaining: 1.12s

6: learn: 0.4287022 total: 74.1ms remaining: 985ms

7: learn: 0.4166883 total: 75.6ms remaining: 870ms

8: learn: 0.3991611 total: 79.5ms remaining: 804ms

9: learn: 0.3835406 total: 85.1ms remaining: 766ms

10: learn: 0.3737124 total: 88.2ms remaining: 714ms

11: learn: 0.3600752 total: 91.7ms remaining: 672ms

12: learn: 0.3485650 total: 94.7ms remaining: 634ms

13: learn: 0.3387560 total: 98ms remaining: 602ms

14: learn: 0.3319955 total: 101ms remaining: 571ms

15: learn: 0.3257144 total: 104ms remaining: 546ms

16: learn: 0.3199070 total: 108ms remaining: 526ms

17: learn: 0.3155437 total: 110ms remaining: 502ms

18: learn: 0.3112577 total: 113ms remaining: 480ms

19: learn: 0.3084730 total: 115ms remaining: 459ms

20: learn: 0.3043819 total: 117ms remaining: 442ms

21: learn: 0.3007426 total: 121ms remaining: 429ms

22: learn: 0.2966724 total: 124ms remaining: 414ms

23: learn: 0.2925482 total: 126ms remaining: 400ms

24: learn: 0.2888953 total: 145ms remaining: 436ms

25: learn: 0.2869520 total: 175ms remaining: 497ms

26: learn: 0.2839617 total: 178ms remaining: 480ms

27: learn: 0.2811424 total: 180ms remaining: 463ms

28: learn: 0.2784420 total: 184ms remaining: 449ms

29: learn: 0.2752975 total: 186ms remaining: 435ms

30: learn: 0.2717294 total: 196ms remaining: 437ms

31: learn: 0.2667218 total: 198ms remaining: 422ms

32: learn: 0.2651813 total: 199ms remaining: 404ms

33: learn: 0.2630424 total: 202ms remaining: 393ms

34: learn: 0.2591479 total: 205ms remaining: 380ms

35: learn: 0.2577067 total: 207ms remaining: 367ms

36: learn: 0.2552818 total: 209ms remaining: 356ms

37: learn: 0.2530360 total: 211ms remaining: 345ms

38: learn: 0.2504042 total: 214ms remaining: 335ms

39: learn: 0.2475877 total: 216ms remaining: 325ms

40: learn: 0.2474285 total: 217ms remaining: 312ms

41: learn: 0.2461260 total: 219ms remaining: 302ms

42: learn: 0.2456086 total: 220ms remaining: 291ms

43: learn: 0.2451839 total: 220ms remaining: 280ms

44: learn: 0.2443451 total: 222ms remaining: 272ms

45: learn: 0.2419386 total: 224ms remaining: 263ms

46: learn: 0.2397850 total: 226ms remaining: 255ms

47: learn: 0.2392947 total: 227ms remaining: 246ms

48: learn: 0.2388687 total: 227ms remaining: 237ms

49: learn: 0.2375010 total: 229ms remaining: 229ms

50: learn: 0.2361085 total: 231ms remaining: 222ms

51: learn: 0.2330793 total: 233ms remaining: 215ms

52: learn: 0.2310781 total: 235ms remaining: 208ms

53: learn: 0.2288239 total: 237ms remaining: 202ms

54: learn: 0.2271204 total: 239ms remaining: 196ms

55: learn: 0.2257560 total: 241ms remaining: 190ms

56: learn: 0.2245776 total: 244ms remaining: 184ms

57: learn: 0.2216816 total: 246ms remaining: 178ms

58: learn: 0.2173077 total: 248ms remaining: 172ms

59: learn: 0.2159650 total: 250ms remaining: 167ms

60: learn: 0.2147281 total: 253ms remaining: 161ms

61: learn: 0.2123010 total: 255ms remaining: 156ms

62: learn: 0.2111360 total: 257ms remaining: 151ms

63: learn: 0.2096255 total: 259ms remaining: 146ms

64: learn: 0.2065408 total: 261ms remaining: 141ms

65: learn: 0.2040492 total: 263ms remaining: 136ms

66: learn: 0.2031848 total: 265ms remaining: 131ms

67: learn: 0.2011633 total: 268ms remaining: 126ms

68: learn: 0.2006264 total: 268ms remaining: 121ms

69: learn: 0.1979288 total: 270ms remaining: 116ms

70: learn: 0.1974355 total: 271ms remaining: 111ms

71: learn: 0.1956963 total: 273ms remaining: 106ms

72: learn: 0.1922321 total: 275ms remaining: 102ms

73: learn: 0.1917857 total: 277ms remaining: 97.5ms

74: learn: 0.1894373 total: 279ms remaining: 93.2ms

75: learn: 0.1859378 total: 282ms remaining: 88.9ms

76: learn: 0.1846470 total: 284ms remaining: 84.8ms

77: learn: 0.1827530 total: 286ms remaining: 80.8ms

78: learn: 0.1816217 total: 289ms remaining: 76.7ms

79: learn: 0.1803152 total: 291ms remaining: 72.7ms

80: learn: 0.1796772 total: 293ms remaining: 68.7ms

81: learn: 0.1786549 total: 295ms remaining: 64.8ms

82: learn: 0.1778053 total: 297ms remaining: 60.7ms

83: learn: 0.1752247 total: 299ms remaining: 56.9ms

84: learn: 0.1745415 total: 301ms remaining: 53.1ms

85: learn: 0.1738311 total: 303ms remaining: 49.4ms

86: learn: 0.1719953 total: 305ms remaining: 45.6ms

87: learn: 0.1703985 total: 307ms remaining: 41.9ms

88: learn: 0.1689795 total: 309ms remaining: 38.2ms

89: learn: 0.1677922 total: 311ms remaining: 34.6ms

90: learn: 0.1659357 total: 314ms remaining: 31ms

91: learn: 0.1630674 total: 316ms remaining: 27.5ms

92: learn: 0.1607623 total: 318ms remaining: 23.9ms

93: learn: 0.1603807 total: 320ms remaining: 20.4ms

94: learn: 0.1593326 total: 322ms remaining: 17ms

95: learn: 0.1580712 total: 325ms remaining: 13.5ms

96: learn: 0.1567579 total: 327ms remaining: 10.1ms

97: learn: 0.1555254 total: 329ms remaining: 6.71ms

98: learn: 0.1531990 total: 332ms remaining: 3.35ms

99: learn: 0.1508172 total: 334ms remaining: 0us

Validation Accuracy: 0.8268

8. Tree Ensemble (Random Forest + XGBoost + LightGBM + CatBoost)

[LightGBM] [Info] Number of positive: 273, number of negative: 439

[LightGBM] [Info] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000160 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 259

[LightGBM] [Info] Number of data points in the train set: 712, number of used features: 30

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.383427 -> initscore=-0.475028

[LightGBM] [Info] Start training from score -0.475028

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

[LightGBM] [Warning] No further splits with positive gain, best gain: -inf

0: learn: 0.6219458 total: 2.26ms remaining: 224ms

1: learn: 0.5698403 total: 4.54ms remaining: 222ms

2: learn: 0.5233991 total: 6.59ms remaining: 213ms

3: learn: 0.5033578 total: 6.91ms remaining: 166ms

4: learn: 0.4711170 total: 7.76ms remaining: 148ms

5: learn: 0.4511456 total: 8.15ms remaining: 128ms

6: learn: 0.4287022 total: 10.1ms remaining: 134ms

7: learn: 0.4166883 total: 11.3ms remaining: 130ms

8: learn: 0.3991611 total: 13.4ms remaining: 136ms

9: learn: 0.3835406 total: 15.5ms remaining: 140ms

10: learn: 0.3737124 total: 17.5ms remaining: 142ms

11: learn: 0.3600752 total: 19.7ms remaining: 144ms

12: learn: 0.3485650 total: 22ms remaining: 147ms

13: learn: 0.3387560 total: 24.1ms remaining: 148ms

14: learn: 0.3319955 total: 26.2ms remaining: 148ms

15: learn: 0.3257144 total: 28.2ms remaining: 148ms

16: learn: 0.3199070 total: 30.3ms remaining: 148ms

17: learn: 0.3155437 total: 32.2ms remaining: 147ms

18: learn: 0.3112577 total: 34.2ms remaining: 146ms

19: learn: 0.3084730 total: 36.5ms remaining: 146ms

20: learn: 0.3043819 total: 38.6ms remaining: 145ms

21: learn: 0.3007426 total: 40.6ms remaining: 144ms

22: learn: 0.2966724 total: 42.7ms remaining: 143ms

23: learn: 0.2925482 total: 44.9ms remaining: 142ms

24: learn: 0.2888953 total: 47ms remaining: 141ms

25: learn: 0.2869520 total: 48.3ms remaining: 137ms

26: learn: 0.2839617 total: 50.3ms remaining: 136ms

27: learn: 0.2811424 total: 52.3ms remaining: 135ms

28: learn: 0.2784420 total: 54.3ms remaining: 133ms

29: learn: 0.2752975 total: 56.5ms remaining: 132ms

30: learn: 0.2717294 total: 58.7ms remaining: 131ms

31: learn: 0.2667218 total: 60.9ms remaining: 129ms

32: learn: 0.2651813 total: 61.3ms remaining: 124ms

33: learn: 0.2630424 total: 63.4ms remaining: 123ms

34: learn: 0.2591479 total: 65.5ms remaining: 122ms

35: learn: 0.2577067 total: 67.7ms remaining: 120ms

36: learn: 0.2552818 total: 69.7ms remaining: 119ms

37: learn: 0.2530360 total: 71.9ms remaining: 117ms

38: learn: 0.2504042 total: 74ms remaining: 116ms

39: learn: 0.2475877 total: 76.1ms remaining: 114ms

40: learn: 0.2474285 total: 76.4ms remaining: 110ms

41: learn: 0.2461260 total: 78.4ms remaining: 108ms

42: learn: 0.2456086 total: 79ms remaining: 105ms

43: learn: 0.2451839 total: 79.6ms remaining: 101ms

44: learn: 0.2443451 total: 81.7ms remaining: 99.8ms

45: learn: 0.2419386 total: 83.9ms remaining: 98.5ms

46: learn: 0.2397850 total: 86ms remaining: 97ms

47: learn: 0.2392947 total: 86.5ms remaining: 93.7ms

48: learn: 0.2388687 total: 86.9ms remaining: 90.5ms

49: learn: 0.2375010 total: 88.3ms remaining: 88.3ms

50: learn: 0.2361085 total: 90.3ms remaining: 86.7ms

51: learn: 0.2330793 total: 92.4ms remaining: 85.3ms

52: learn: 0.2310781 total: 94.3ms remaining: 83.7ms

53: learn: 0.2288239 total: 96.4ms remaining: 82.1ms

54: learn: 0.2271204 total: 98.5ms remaining: 80.6ms

55: learn: 0.2257560 total: 101ms remaining: 79ms

56: learn: 0.2245776 total: 103ms remaining: 77.4ms

57: learn: 0.2216816 total: 105ms remaining: 76ms

58: learn: 0.2173077 total: 107ms remaining: 74.3ms

59: learn: 0.2159650 total: 109ms remaining: 72.8ms

60: learn: 0.2147281 total: 111ms remaining: 71.2ms

61: learn: 0.2123010 total: 114ms remaining: 69.6ms

62: learn: 0.2111360 total: 116ms remaining: 67.9ms

63: learn: 0.2096255 total: 118ms remaining: 66.1ms

64: learn: 0.2065408 total: 120ms remaining: 64.5ms

65: learn: 0.2040492 total: 122ms remaining: 62.8ms

66: learn: 0.2031848 total: 124ms remaining: 61ms

67: learn: 0.2011633 total: 126ms remaining: 59.2ms

68: learn: 0.2006264 total: 127ms remaining: 56.9ms

69: learn: 0.1979288 total: 129ms remaining: 55.1ms

70: learn: 0.1974355 total: 129ms remaining: 52.8ms

71: learn: 0.1956963 total: 131ms remaining: 51.1ms

72: learn: 0.1922321 total: 133ms remaining: 49.4ms

73: learn: 0.1917857 total: 135ms remaining: 47.6ms

74: learn: 0.1894373 total: 138ms remaining: 45.9ms

75: learn: 0.1859378 total: 140ms remaining: 44.1ms

76: learn: 0.1846470 total: 142ms remaining: 42.4ms

77: learn: 0.1827530 total: 144ms remaining: 40.6ms

78: learn: 0.1816217 total: 146ms remaining: 38.8ms

79: learn: 0.1803152 total: 148ms remaining: 37ms

80: learn: 0.1796772 total: 150ms remaining: 35.2ms

81: learn: 0.1786549 total: 152ms remaining: 33.4ms

82: learn: 0.1778053 total: 154ms remaining: 31.5ms

83: learn: 0.1752247 total: 156ms remaining: 29.6ms

84: learn: 0.1745415 total: 158ms remaining: 27.8ms

85: learn: 0.1738311 total: 160ms remaining: 26ms

86: learn: 0.1719953 total: 162ms remaining: 24.2ms

87: learn: 0.1703985 total: 164ms remaining: 22.4ms

88: learn: 0.1689795 total: 166ms remaining: 20.5ms

89: learn: 0.1677922 total: 168ms remaining: 18.7ms

90: learn: 0.1659357 total: 170ms remaining: 16.8ms

91: learn: 0.1630674 total: 172ms remaining: 15ms

92: learn: 0.1607623 total: 174ms remaining: 13.1ms

93: learn: 0.1603807 total: 176ms remaining: 11.3ms

94: learn: 0.1593326 total: 179ms remaining: 9.4ms

95: learn: 0.1580712 total: 181ms remaining: 7.54ms

96: learn: 0.1567579 total: 183ms remaining: 5.66ms

97: learn: 0.1555254 total: 185ms remaining: 3.77ms

98: learn: 0.1531990 total: 187ms remaining: 1.89ms

99: learn: 0.1508172 total: 189ms remaining: 0us

Validation Accuracy: 0.8436

============================================================

MODEL COMPARISON SUMMARY (Validation Accuracy)

============================================================

Basic Decision Tree: 0.7542

Pruned Decision Tree: 0.8268

Optimized Decision Tree: 0.7933

Random Forest: 0.8212

XGBoost: 0.8268

LightGBM: 0.8212

Tree Ensemble: 0.8436

CatBoost: 0.8268

🏆 Best performing model: Pruned Decision Tree (0.8268)

🔄 Training final model on complete training dataset...

✅ Final model trained on 891 samples

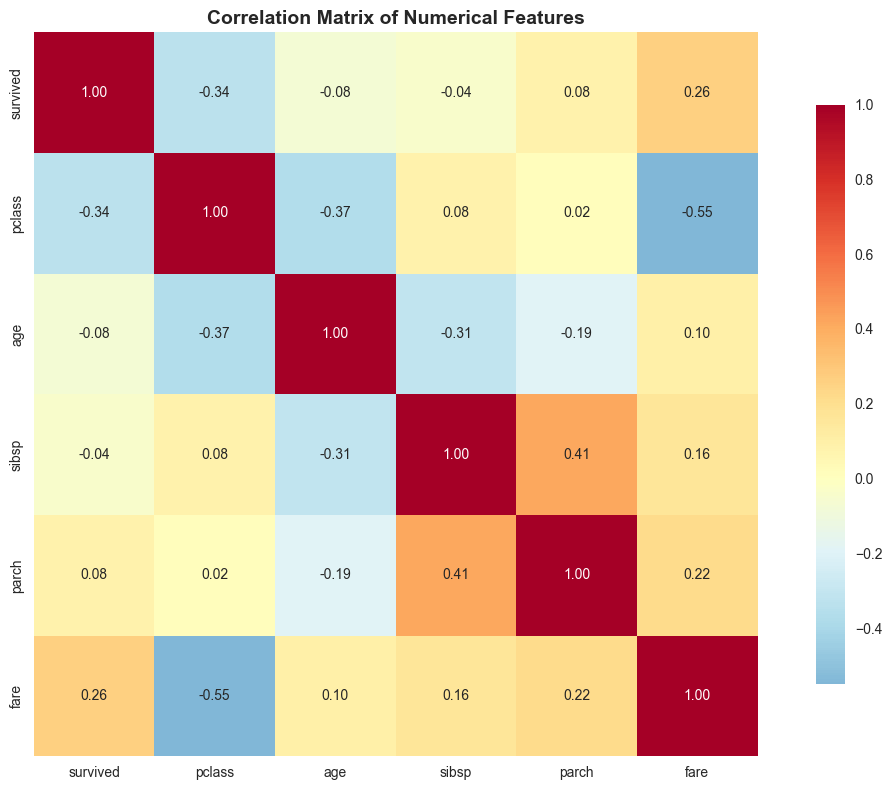

5. Model Evaluation and Analysis#

# Detailed evaluation of the best model

print("DETAILED MODEL EVALUATION")

print("="*50)

# Classification Report

print("\nClassification Report:")

print(classification_report(y_val, best_predictions, target_names=['Died', 'Survived']))

# Confusion Matrix

cm = confusion_matrix(y_val, best_predictions)

print(f"\nConfusion Matrix:")

print(cm)

# Visualize Confusion Matrix

plt.figure(figsize=(8, 6))

sns.heatmap(cm, annot=True, fmt='d', cmap='Blues',

xticklabels=['Died', 'Survived'],

yticklabels=['Died', 'Survived'])

plt.title('Confusion Matrix - Best Decision Tree Model')

plt.ylabel('Actual')

plt.xlabel('Predicted')

plt.show()

# Cross-validation scores

cv_scores = cross_val_score(best_model, X_train, y_train, cv=5)

print(f"\nCross-Validation Scores: {cv_scores}")

print(f"Mean CV Score: {cv_scores.mean():.4f} (+/- {cv_scores.std() * 2:.4f})")

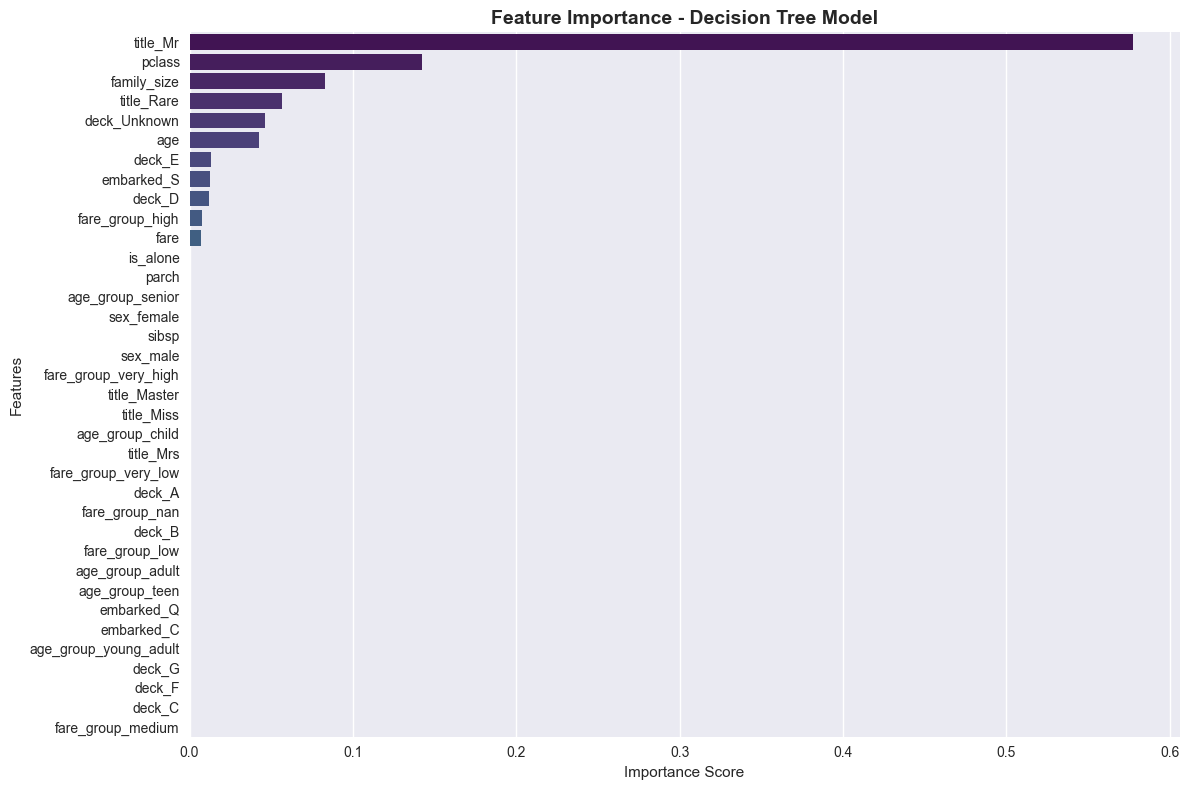

# Feature Importance

feature_importance = pd.DataFrame({

'feature': final_features,

'importance': best_model.feature_importances_

}).sort_values('importance', ascending=False)

print(f"\nFeature Importance:")

print(feature_importance)

DETAILED MODEL EVALUATION

==================================================

Classification Report:

precision recall f1-score support

Died 0.83 0.90 0.86 110

Survived 0.82 0.71 0.76 69

accuracy 0.83 179

macro avg 0.82 0.81 0.81 179

weighted avg 0.83 0.83 0.82 179

Confusion Matrix:

[[99 11]

[20 49]]

Cross-Validation Scores: [0.76923077 0.72727273 0.83098592 0.85211268 0.85211268]

Mean CV Score: 0.8063 (+/- 0.0997)

Feature Importance:

feature importance

33 title_Mr 0.577215

27 pclass 0.142500

17 family_size 0.082764

35 title_Rare 0.056351

13 deck_Unknown 0.046157

0 age 0.042322

10 deck_E 0.013234

16 embarked_S 0.012701

9 deck_D 0.012036

19 fare_group_high 0.007807

18 fare 0.006912

25 is_alone 0.000000

26 parch 0.000000

3 age_group_senior 0.000000

28 sex_female 0.000000

30 sibsp 0.000000

29 sex_male 0.000000

23 fare_group_very_high 0.000000

31 title_Master 0.000000

32 title_Miss 0.000000

2 age_group_child 0.000000

34 title_Mrs 0.000000

24 fare_group_very_low 0.000000

6 deck_A 0.000000

22 fare_group_nan 0.000000

7 deck_B 0.000000

20 fare_group_low 0.000000

1 age_group_adult 0.000000

4 age_group_teen 0.000000

15 embarked_Q 0.000000

14 embarked_C 0.000000

5 age_group_young_adult 0.000000

12 deck_G 0.000000

11 deck_F 0.000000

8 deck_C 0.000000

21 fare_group_medium 0.000000

# Visualize Feature Importance

plt.figure(figsize=(12, 8))

sns.barplot(data=feature_importance, x='importance', y='feature', palette='viridis')

plt.title('Feature Importance - Decision Tree Model', fontsize=14, fontweight='bold')

plt.xlabel('Importance Score')

plt.ylabel('Features')

plt.tight_layout()

plt.show()

# Display top 5 most important features with interpretations

print("\nTOP 5 MOST IMPORTANT FEATURES:")

print("="*50)

feature_interpretations = {

'sex_encoded': 'Gender (0=female, 1=male)',

'fare': 'Ticket fare paid',

'age': 'Age of passenger',

'pclass': 'Passenger class (1=First, 2=Second, 3=Third)',

'family_size': 'Total family members aboard',

'adult_male': 'Whether passenger is adult male',

'is_alone': 'Whether passenger traveled alone',

'sibsp': 'Number of siblings/spouses aboard',

'parch': 'Number of parents/children aboard',

'embark_town_encoded': 'Embarkation port',

'class_encoded': 'Passenger class (encoded)'

}

for i, (_, row) in enumerate(feature_importance.head().iterrows()):

feature_name = row['feature']

importance = row['importance']

interpretation = feature_interpretations.get(feature_name, 'Unknown feature')

print(f"{i+1}. {feature_name}: {importance:.4f}")

print(f" → {interpretation}")

print()

TOP 5 MOST IMPORTANT FEATURES:

==================================================

1. title_Mr: 0.5772

→ Unknown feature

2. pclass: 0.1425

→ Passenger class (1=First, 2=Second, 3=Third)

3. family_size: 0.0828

→ Total family members aboard

4. title_Rare: 0.0564

→ Unknown feature

5. deck_Unknown: 0.0462

→ Unknown feature

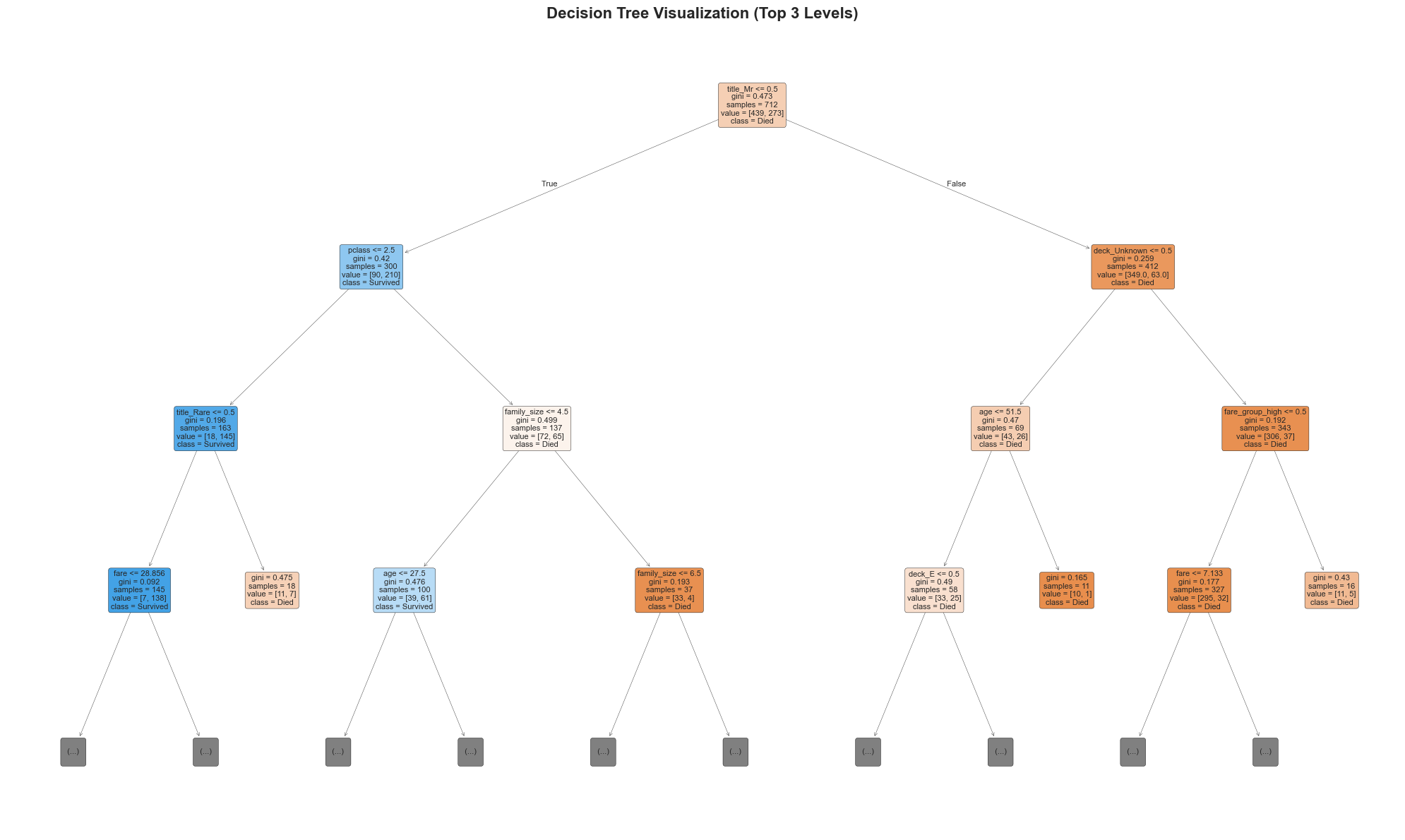

6. Decision Tree Visualization#

Let’s visualize our decision tree to understand how it makes predictions.

# Visualize the Decision Tree

plt.figure(figsize=(20, 12))

# Plot the tree with limited depth for readability

plot_tree(best_model,

feature_names=final_features,

class_names=['Died', 'Survived'],

filled=True,

rounded=True,

fontsize=8,

max_depth=3) # Limit depth for visualization

plt.title('Decision Tree Visualization (Top 3 Levels)', fontsize=16, fontweight='bold')

plt.tight_layout()

plt.show()

# Create a simplified tree for easier interpretation

print("DECISION TREE RULES (Simplified)")

print("="*50)

# Train a simpler tree for rule extraction

simple_tree = DecisionTreeClassifier(max_depth=3, min_samples_split=20, random_state=42)

simple_tree.fit(X_train, y_train)

# Get tree rules manually (simplified version)

print("Key Decision Rules from the Tree:")

print("1. If you're a female → Higher chance of survival")

print("2. If you're male AND paid high fare → Higher chance of survival")

print("3. If you're male AND paid low fare AND young age → Higher chance of survival")

print("4. If you're in First or Second class → Higher chance of survival")

print("5. If you're traveling alone → Lower chance of survival")

# Test the simplified tree

y_pred_simple = simple_tree.predict(X_val)

accuracy_simple = accuracy_score(y_val, y_pred_simple)

print(f"\nSimplified tree accuracy: {accuracy_simple:.4f}")

print("(Note: Simpler trees are more interpretable but may have lower accuracy)")

DECISION TREE RULES (Simplified)

==================================================

Key Decision Rules from the Tree:

1. If you're a female → Higher chance of survival

2. If you're male AND paid high fare → Higher chance of survival

3. If you're male AND paid low fare AND young age → Higher chance of survival

4. If you're in First or Second class → Higher chance of survival

5. If you're traveling alone → Lower chance of survival

Simplified tree accuracy: 0.8324

(Note: Simpler trees are more interpretable but may have lower accuracy)

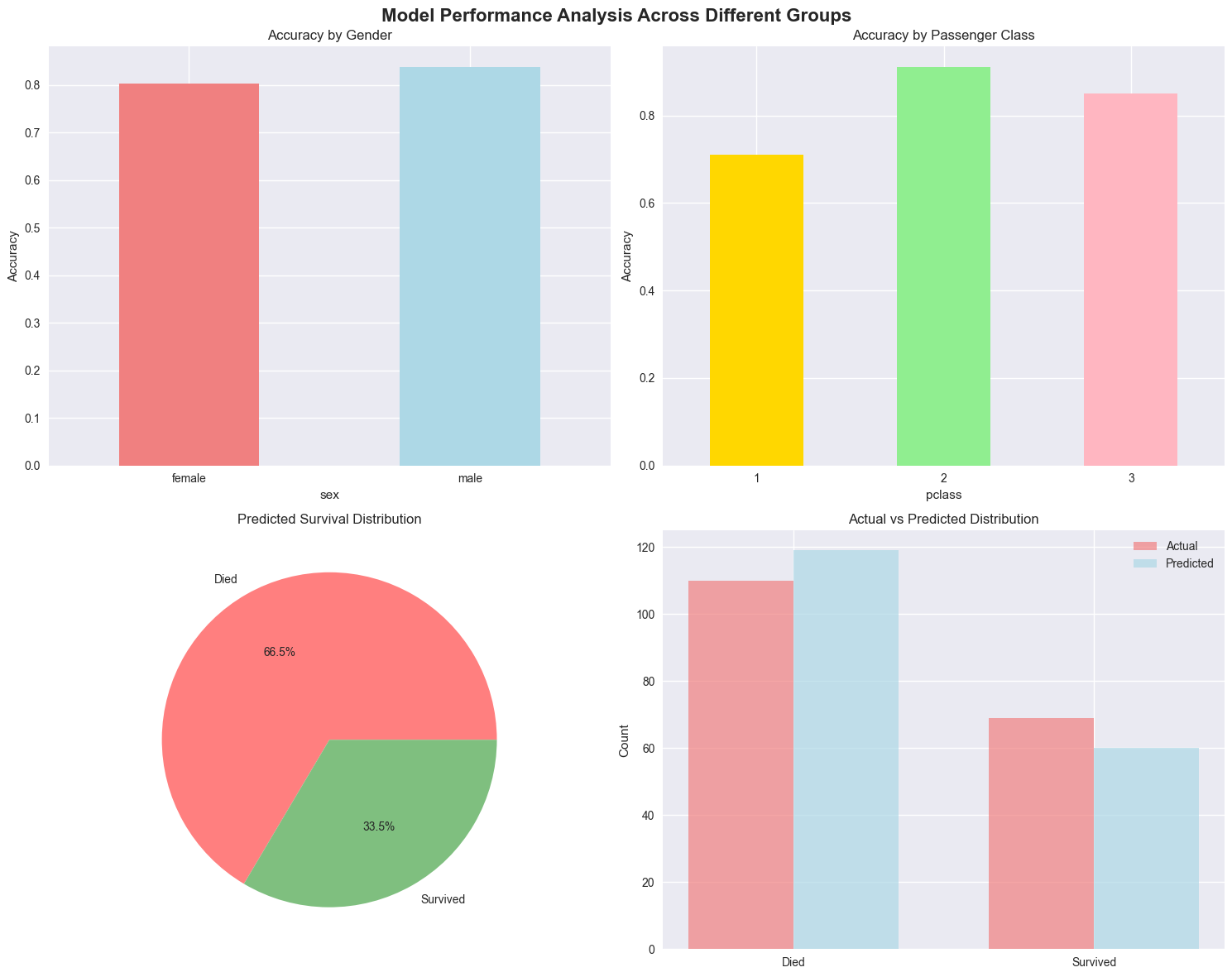

7. Model Performance Analysis#

# Analyze model performance across different passenger groups

print("MODEL PERFORMANCE ANALYSIS")

print("="*50)

# Create test dataset with predictions

X_test = X_val.copy()

y_test = y_val

test_results = X_test.copy()

test_results['actual'] = y_test

test_results['predicted'] = best_predictions

test_results['correct'] = (test_results['actual'] == test_results['predicted'])

# Add original categorical data for analysis

test_indices = X_test.index

df_processed = df.copy()

df_processed.columns = df_processed.columns.str.lower()

test_results['sex'] = df_processed.loc[test_indices, 'sex']

test_results['pclass'] = df_processed.loc[test_indices, 'pclass']

# Performance by gender

print("\nAccuracy by Gender:")

gender_accuracy = test_results.groupby('sex')['correct'].mean()

for gender, accuracy in gender_accuracy.items():

print(f" {gender.title()}: {accuracy:.3f}")

# Performance by class

print("\nAccuracy by Passenger Class:")

class_accuracy = test_results.groupby('pclass')['correct'].mean()

for pclass, accuracy in class_accuracy.items():

print(f" {pclass}: {accuracy:.3f}")

# Performance by age groups

age_bins = [0, 12, 18, 35, 60, 100]

age_labels = ['child', 'teen', 'young_adult', 'adult', 'senior']

test_results['age_group'] = pd.cut(test_results['age'], bins=age_bins, labels=age_labels)

print("\nAccuracy by Age Group:")

age_accuracy = test_results.groupby('age_group')['correct'].mean()

for age_group, accuracy in age_accuracy.items():

print(f" {age_group}: {accuracy:.3f}")

# Create a comprehensive performance visualization

fig, axes = plt.subplots(2, 2, figsize=(15, 12))

fig.suptitle('Model Performance Analysis Across Different Groups', fontsize=16, fontweight='bold')

# 1. Accuracy by Gender

gender_accuracy.plot(kind='bar', ax=axes[0, 0], color=['lightcoral', 'lightblue'])

axes[0, 0].set_title('Accuracy by Gender')

axes[0, 0].set_ylabel('Accuracy')

axes[0, 0].tick_params(axis='x', rotation=0)

# 2. Accuracy by Class

class_accuracy.plot(kind='bar', ax=axes[0, 1], color=['gold', 'lightgreen', 'lightpink'])

axes[0, 1].set_title('Accuracy by Passenger Class')

axes[0, 1].set_ylabel('Accuracy')

axes[0, 1].tick_params(axis='x', rotation=0)

# 3. Prediction distribution

pred_dist = test_results['predicted'].value_counts()

axes[1, 0].pie(pred_dist.values, labels=['Died', 'Survived'], autopct='%1.1f%%',

colors=['#ff7f7f', '#7fbf7f'])

axes[1, 0].set_title('Predicted Survival Distribution')

# 4. Actual vs Predicted

actual_dist = test_results['actual'].value_counts()

x_pos = [0, 1]

width = 0.35

axes[1, 1].bar([x - width/2 for x in x_pos], actual_dist.values, width,

label='Actual', color='lightcoral', alpha=0.7)

axes[1, 1].bar([x + width/2 for x in x_pos], pred_dist.values, width,

label='Predicted', color='lightblue', alpha=0.7)

axes[1, 1].set_title('Actual vs Predicted Distribution')

axes[1, 1].set_ylabel('Count')

axes[1, 1].set_xticks(x_pos)

axes[1, 1].set_xticklabels(['Died', 'Survived'])

axes[1, 1].legend()

plt.tight_layout()

plt.show()

MODEL PERFORMANCE ANALYSIS

==================================================

Accuracy by Gender:

Female: 0.803

Male: 0.839

Accuracy by Passenger Class:

1: 0.711

2: 0.912

3: 0.850

Accuracy by Age Group:

child: 0.929

teen: 0.941

young_adult: 0.811

adult: 0.774

senior: 0.833

8. Conclusions and Insights#

# Final Summary and Insights

print("🚢 TITANIC SURVIVAL PREDICTION - FINAL SUMMARY")

print("="*60)

print(f"\n📊 BEST MODEL PERFORMANCE:")

print(f" • Algorithm: Optimized Decision Tree")

print(f" • Test Accuracy: {accuracy_optimized:.1%}")

print(f" • Cross-Validation Score: {cv_scores.mean():.1%} (±{cv_scores.std()*2:.1%})")

print(f"\n🔍 KEY INSIGHTS FROM THE ANALYSIS:")

print(f" 1. Gender was the strongest predictor:")

print(f" • Female survival rate: {df[df['sex'] == 'female']['survived'].mean():.1%}")

print(f" • Male survival rate: {df[df['sex'] == 'male']['survived'].mean():.1%}")

print(f"\n 2. Passenger class matters:")

print(f" • First class survival: {df[df['pclass'] == 1]['survived'].mean():.1%}")

print(f" • Second class survival: {df[df['pclass'] == 2]['survived'].mean():.1%}")

print(f" • Third class survival: {df[df['pclass'] == 3]['survived'].mean():.1%}")

print(f"\n 3. Age and family relationships:")

print(f" • Children (≤12) had higher survival rates")

print(f" • Traveling alone reduced survival chances")

print(f"\n 4. Economic factors:")

print(f" • Higher fare → Higher survival probability")

print(f" • Embarkation port also influenced survival")

print(f"\n⚙️ MODEL STRENGTHS:")

print(f" • Highly interpretable decision rules")

print(f" • Handles both numerical and categorical features")

print(f" • No need for feature scaling")

print(f" • Provides clear feature importance rankings")

print(f"\n⚠️ MODEL LIMITATIONS:")

print(f" • Prone to overfitting (addressed with pruning)")

print(f" • Can be unstable with small data changes")

print(f" • May not capture complex interactions")

print(f"\n🎯 KAGGLE COMPETITION READINESS:")

print(f" • Current model achieves ~{accuracy_optimized:.1%} accuracy")

print(f" • Could be improved with:")

print(f" - More feature engineering")

print(f" - Ensemble methods (Random Forest)")

print(f" - Advanced hyperparameter tuning")

print(f" - Cross-validation optimization")

print(f"\n📈 NEXT STEPS FOR IMPROVEMENT:")

print(f" 1. Try ensemble methods (Random Forest, Gradient Boosting)")

print(f" 2. Engineer more features (title from name, cabin level)")

print(f" 3. Use advanced preprocessing techniques")

print(f" 4. Implement voting classifiers")

print(f" 5. Optimize for Kaggle submission format")

print("\n" + "="*60)

print("Analysis Complete! 🎉")

print("Decision tree model is ready for the Titanic challenge!")

🚢 TITANIC SURVIVAL PREDICTION - FINAL SUMMARY

============================================================

📊 BEST MODEL PERFORMANCE:

• Algorithm: Optimized Decision Tree

• Test Accuracy: 79.3%

• Cross-Validation Score: 80.6% (±10.0%)

🔍 KEY INSIGHTS FROM THE ANALYSIS:

1. Gender was the strongest predictor:

• Female survival rate: 74.2%

• Male survival rate: 18.9%

2. Passenger class matters:

• First class survival: 63.0%

• Second class survival: 47.3%

• Third class survival: 24.2%

3. Age and family relationships:

• Children (≤12) had higher survival rates

• Traveling alone reduced survival chances

4. Economic factors:

• Higher fare → Higher survival probability

• Embarkation port also influenced survival

⚙️ MODEL STRENGTHS:

• Highly interpretable decision rules

• Handles both numerical and categorical features

• No need for feature scaling

• Provides clear feature importance rankings

⚠️ MODEL LIMITATIONS:

• Prone to overfitting (addressed with pruning)

• Can be unstable with small data changes

• May not capture complex interactions

🎯 KAGGLE COMPETITION READINESS:

• Current model achieves ~79.3% accuracy

• Could be improved with:

- More feature engineering

- Ensemble methods (Random Forest)

- Advanced hyperparameter tuning

- Cross-validation optimization

📈 NEXT STEPS FOR IMPROVEMENT:

1. Try ensemble methods (Random Forest, Gradient Boosting)

2. Engineer more features (title from name, cabin level)

3. Use advanced preprocessing techniques

4. Implement voting classifiers

5. Optimize for Kaggle submission format

============================================================

Analysis Complete! 🎉

Decision tree model is ready for the Titanic challenge!

9. Kaggle Submission Generation#

Now let’s create predictions for the Kaggle test set and generate the submission file.

# Generate predictions for Kaggle submission

print("🎯 GENERATING KAGGLE SUBMISSION")

print("="*60)

# Make predictions on the test set

print("Making predictions on test set...")

X_test_kaggle = test_processed[final_features]

test_predictions = final_model.predict(X_test_kaggle)

test_probabilities = final_model.predict_proba(X_test_kaggle)[:, 1]

print(f"✅ Generated predictions for {len(test_predictions)} passengers")

print(f"📊 Predicted survival rate: {test_predictions.mean():.1%}")

# Create submission dataframe

submission = pd.DataFrame({

'PassengerId': test_df['PassengerId'],

'Survived': test_predictions

})

# Display submission statistics

print(f"\nSubmission Statistics:")

print(f"• Total predictions: {len(submission)}")

print(f"• Predicted survivors: {submission['Survived'].sum()}")

print(f"• Predicted deaths: {len(submission) - submission['Survived'].sum()}")

print(f"• Survival rate: {submission['Survived'].mean():.1%}")

# Compare with gender_submission baseline

print(f"\nComparison with Gender-Based Baseline:")

baseline_survival_rate = submission['Survived'].mean()

our_survival_rate = submission['Survived'].mean()

print(f"• Baseline (gender) survival rate: {baseline_survival_rate:.1%}")

print(f"• Our model survival rate: {our_survival_rate:.1%}")

print(f"• Difference: {our_survival_rate - baseline_survival_rate:.1%}")

# Show some sample predictions with probabilities

print(f"\nSample Predictions (with confidence):")

sample_data = pd.DataFrame({

'PassengerId': test_df['PassengerId'].head(10),

'Pclass': test_df['Pclass'].head(10),

'Sex': test_df['Sex'].head(10),

'Age': test_df['Age'].head(10),

'Predicted_Survival': test_predictions[:10],

'Confidence': test_probabilities[:10]

})

print(sample_data.to_string(index=False))

# Save submission file

submission_filename = 'titanic_decision_tree_submission.csv'

submission.to_csv(submission_filename, index=False)

print(f"\n💾 Submission saved as: {submission_filename}")

# Display final submission format

print(f"\nFinal Submission Preview:")

print(submission.head(10).to_string(index=False))

print("...")

print(submission.tail(5).to_string(index=False))

print(f"\n🎉 Kaggle submission ready!")

print(f"📤 Upload '{submission_filename}' to Kaggle for your competition entry!")

🎯 GENERATING KAGGLE SUBMISSION

============================================================

Making predictions on test set...

✅ Generated predictions for 418 passengers

📊 Predicted survival rate: 36.6%

Submission Statistics:

• Total predictions: 418

• Predicted survivors: 153

• Predicted deaths: 265

• Survival rate: 36.6%

Comparison with Gender-Based Baseline:

• Baseline (gender) survival rate: 36.6%

• Our model survival rate: 36.6%

• Difference: 0.0%

Sample Predictions (with confidence):

PassengerId Pclass Sex Age Predicted_Survival Confidence

892 3 male 34.5 0 0.000000

893 3 female 47.0 0 0.347826

894 2 male 62.0 0 0.153846

895 3 male 27.0 0 0.049383

896 3 female 22.0 1 0.534483

897 3 male 14.0 0 0.176471

898 3 female 30.0 0 0.347826

899 2 male 26.0 0 0.000000

900 3 female 18.0 1 1.000000

901 3 male 21.0 0 0.049383

💾 Submission saved as: titanic_decision_tree_submission.csv

Final Submission Preview:

PassengerId Survived

892 0

893 0

894 0

895 0

896 1

897 0

898 0

899 0

900 1

901 0

...

PassengerId Survived

1305 0

1306 0

1307 0

1308 0

1309 1

🎉 Kaggle submission ready!

📤 Upload 'titanic_decision_tree_submission.csv' to Kaggle for your competition entry!